In a nutshell

Given our decade-long experience using AI as part of how we serve clients,[1] we’ve been getting questions about the nature of AI and how it is likely to impact us. So we’ve written a trilogy of articles to explain the why, the how, and what to do about it as a CEO.

Will AI achieve general intelligence? Is there any limit to what AI can achieve?

Although it is perilous to attempt to predict the future, in this article we take stock of what we know today about the limits of what AI can achieve.

We will see that considerations from the fields of mathematics, philosophy of mind, and technology each impose some provable limits to the capabilities of AI and how close these capabilities can come to true intelligence.

We will also find that, paradoxically, this increases rather than decreases the probability of humans ending up in servitude to AI.

Understanding AI: Chinese rooms and typing monkeys

Today, when people talk about AI, they generally mean large language models (LLMs). Let’s start by explaining, in an entertaining but accurate manner, what LLMs are. This will help us understand what their limitations are.

Enter the Chinese Room.

Chinese Rooms

The Chinese Room is a thought experiment.[2]

Imagine a man in a locked room who does not speak Mandarin Chinese. He does, however, have a dictionary and/or a set of rules (algorithm) in which he can look up any possible input text in Mandarin Chinese and which will give him a corresponding correct and intelligent output. Now, imagine a Chinese speaker holding a conversation with the Chinese room, by passing messages in Mandarin Chinese into the room through a slot. The answers generated from the dictionary are passed out of the room through the same slot.
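The man's procedure amounts to a blind table lookup. The following is a minimal sketch in Python that assumes a hypothetical two-entry rulebook (the entries mean "hello" and "can you speak Chinese?"). A real rulebook would need an entry for every possible message. The names `RULEBOOK`, `FALLBACK` and `chinese_room` are illustrative only. The function matches strings and never inspects what they mean.

```python
# Hypothetical rulebook: each incoming message maps to a canned reply.
RULEBOOK = {
    "你好": "你好！",          # "Hello" -> "Hello!"
    "你会说中文吗？": "会。",   # "Can you speak Chinese?" -> "Yes."
}

# Reply used when no rule matches ("Please say that again."), also chosen
# by whoever wrote the rulebook, not by the man in the room.
FALLBACK = "请再说一遍。"


def chinese_room(message: str) -> str:
    """Return the reply the rulebook prescribes, by exact string match only."""
    # No parsing, no translation, no understanding: just a dictionary lookup.
    return RULEBOOK.get(message, FALLBACK)


if __name__ == "__main__":
    for note in ["你好", "你会说中文吗？", "今天天气怎么样？"]:
        print(note, "->", chinese_room(note))
```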

The Chinese speaker thinks that he is having an intelligent conversation, in Mandarin Chinese, with the man in the room.

Can we then consider that the man in the room speaks Chinese? Can we consider that he is holding an intelligent conversation?

The man can be replaced by a computer. Can we then consider that this computer, which is blindly doing lookups in a dictionary, has achieved intelligence?

Clearly not.

Consequently, being able to hold an apparently intelligent conversation is not sufficient to prove understanding and intelligence.

LLMs are Chinese Rooms

At the time that philosopher John Searle came up with this thought experiment, Chinese rooms did not exist.

Now they do.

LLMs are precisely that.

Technically, an LLM is a compressed dictionary / algorithm that, for every possible input, will provide a hopefully sensible and intelligent output.

Compression

The amount of storage space that would be necessary to be able to store a Chinese room dictionary is astronomical. In the case of LLMs, this issue is addressed via compression. The massive amounts of data on which the LLM is trained are compressed via neural networks.
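A back-of-the-envelope calculation shows why. The sketch below assumes a vocabulary of 50,000 tokens and inputs exactly 100 tokens long. Both numbers are hypothetical and chosen to be modest. It counts the distinct possible inputs, each of which would need its own dictionary entry. For comparison, it uses the commonly cited estimate of roughly 10^80 atoms in the observable universe.

```python
import math

VOCABULARY_SIZE = 50_000  # hypothetical number of distinct tokens
INPUT_LENGTH = 100        # hypothetical prompt length, in tokens
ATOMS_EXPONENT = 80       # common estimate: about 10^80 atoms in the observable universe


def log10_number_of_inputs(vocab: int, length: int) -> float:
    """log10 of the number of distinct inputs of exactly `length` tokens (vocab ** length)."""
    # Work with logarithms so the result is a readable exponent.
    return length * math.log10(vocab)


if __name__ == "__main__":
    exponent = log10_number_of_inputs(VOCABULARY_SIZE, INPUT_LENGTH)
    print(f"Distinct {INPUT_LENGTH}-token inputs: about 10^{exponent:.0f}")
    print(f"Atoms in the observable universe: about 10^{ATOMS_EXPONENT}")
```

Under these assumptions there are about 10^470 possible inputs, counting only those of exactly 100 tokens. A dictionary with one entry per input could therefore never be stored.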

However, this compression is not merely a pragmatic solution to a practical problem. It is also a core part of how this dictionary manages to appear intelligent.

Indeed, how does compression work? Compression works by finding patterns. Once a pattern is found, it can be used to express things more concisely.

Thus, compression is really pattern recognition. And this pattern recognition is what gives the LLM its ability to generalize: a pattern found in the training data can be applied to inputs it has never seen.

Impressive!

But also dangerous, since, often, the pattern spotted will not correspond to any real pattern in the real world.[3]

Simplified example of how compression works

When a zip program compresses a file, it does so by finding regularities in the file.

Let’s say that it finds that every fifth bit is always a zero. This allows a basic (simplistic) way to compress the file: the compressed version can consist of all of the bits of the file except every fifth bit, plus an annotation stating that every fifth bit is a zero. For large files, where the annotation takes up negligible space, this reduces the size by almost 20%.

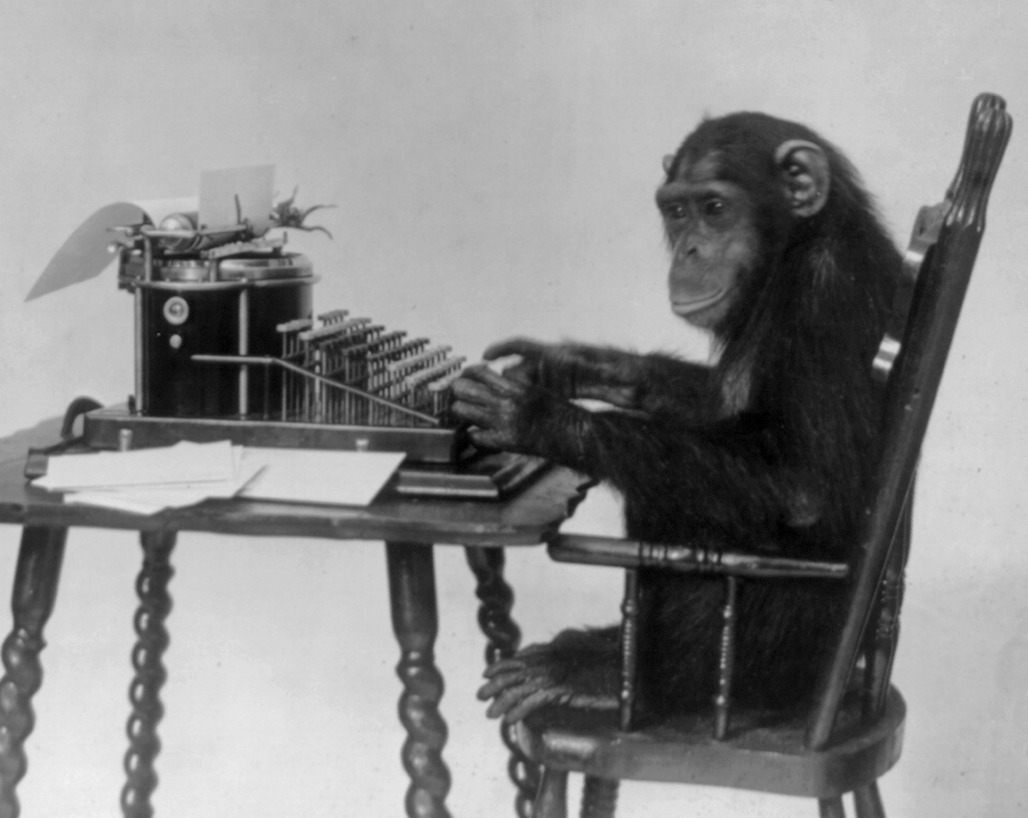
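The simplified scheme above can be written out in a few lines. This is a minimal sketch, not how real zip programs work; they use far more general pattern-finding. The "every fifth bit is zero" rule is hard-coded. The function names are illustrative. Besides the kept bits, the sketch stores the original length so that decompression knows where to stop.

```python
from __future__ import annotations


def compress(bits: list[int]) -> tuple[int, list[int]]:
    """Drop every fifth bit, after checking that it is indeed always 0."""
    # Positions 4, 9, 14, ... (0-based) are the "every fifth bit" positions.
    if any(bits[i] != 0 for i in range(4, len(bits), 5)):
        raise ValueError("pattern does not hold: some fifth bit is 1")
    kept = [b for i, b in enumerate(bits) if i % 5 != 4]
    # The original length is stored alongside so decompression knows where to stop.
    return len(bits), kept


def decompress(length: int, kept: list[int]) -> list[int]:
    """Rebuild the original bits by reinserting a 0 at every fifth position."""
    out = []
    remaining = iter(kept)
    for i in range(length):
        out.append(0 if i % 5 == 4 else next(remaining))
    return out


if __name__ == "__main__":
    data = [1, 0, 1, 1, 0] * 4  # 20 bits in which every fifth bit happens to be 0
    n, packed = compress(data)
    assert decompress(n, packed) == data
    print(len(data), "bits ->", len(packed), "bits")  # 20 bits -> 16 bits
```

If the pattern did not actually hold, `compress` refuses rather than silently losing data. This is the safeguard a real compressor gets by only using patterns it has verified in the file.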

Typing monkeys

For reasons explained below, the *raw* output of such a system is not of very high quality. This is addressed via what I’ll call the typing monkeys approach.

Consider the thought experiment: is it possible for millions of monkeys typing completely randomly for millions of years to produce Shakespeare’s Hamlet?

If they have infinite time, then eventually they’ll end up exhausting all possible sequences of 26 letters and punctuation that have the same length as Hamlet. So, with infinite time, they will almost surely produce Hamlet.[4]

So we’ve proven that a mindless, completely random process can produce not only true intelligence, but absolute genius. Or have we?

Firstly, before we get too enthusiastic about the genius of monkeys and their ability to make Shakespeare redundant, it turns out that they will almost certainly need much, much longer than the lifespan of the universe.[5] (We will come back to this point.)
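To get a feel for the numbers, the sketch below assumes a simplified keyboard of 27 keys (26 letters plus the space bar), each equally likely. It computes the expected number of random attempts needed to type one specific phrase. The phrase and the function name are illustrative choices. The point is that the exponent grows in step with the length of the text.

```python
import math

KEYS = 27  # assumption: 26 lowercase letters plus the space bar, all equally likely


def log10_expected_attempts(text: str, keys: int = KEYS) -> float:
    """log10 of the expected number of random tries needed to type `text` exactly.

    Each try types len(text) random keys and succeeds with probability
    (1 / keys) ** len(text), so the expected number of tries is keys ** len(text).
    """
    return len(text) * math.log10(keys)


if __name__ == "__main__":
    phrase = "to be or not to be"
    print(f"{len(phrase)} characters: about 10^{log10_expected_attempts(phrase):.1f} tries")
```

For this 18-character phrase the answer is about 10^25.8 tries, and each extra character multiplies it by 27. A text the length of a whole play pushes the exponent far beyond anything the universe has time for.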

Secondly, at the end of this process, we are in fact not left with Shakespeare’s Hamlet. We are left with an astronomical number of texts, one of which is likely to be identical to Shakespeare’s Hamlet. In order to recover Shakespeare’s Hamlet, we need one more step, which is to go through these texts and identify which of them are texts of value and keep them, and get rid of the 99.999999…% which are garbage.

So, in order to finally obtain the work of genius from the labors of the monkeys, we need a process of intelligent filtering.

Such a filtering process is how LLMs obtain, from the raw output, the polished output we are used to. Some of the filtering is done by humans, and some is automated. As an example of humans playing this role, during training, humans inspect the results in order to improve them. As an example of automated filtering, some systems use formal criteria (e.g., does this look like gibberish?) to discard bad output. A noteworthy automated approach is to use the AI itself, or another AI, to judge its previous output, thus discarding lower-quality answers. In practice, this approach turns out to have its limits.
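The generate-then-filter idea can be sketched as follows. Everything here is hypothetical and deliberately crude. The "monkey" produces random letter strings. The filter scores each candidate by how many of its words appear in a small hand-written word list (`KNOWN_WORDS`, an illustrative stand-in for the human or AI judges described above). Real systems generate and judge far more sophisticated candidates, but the shape of the loop is the same: generate many, keep few.

```python
from __future__ import annotations

import random
import string

# Hypothetical stand-in for a quality judge: a tiny list of "valid" words.
KNOWN_WORDS = {"a", "i", "to", "be", "or", "not", "is", "it", "at", "on", "in", "an"}


def random_candidate(rng: random.Random, words: int = 4) -> str:
    """The 'monkey': a few random one- or two-letter words, typed blindly."""
    return " ".join(
        "".join(rng.choice(string.ascii_lowercase) for _ in range(rng.randint(1, 2)))
        for _ in range(words)
    )


def score(candidate: str) -> float:
    """The 'filter': fraction of the candidate's words found in KNOWN_WORDS."""
    tokens = candidate.split()
    return sum(t in KNOWN_WORDS for t in tokens) / len(tokens)


def generate_and_filter(n: int, keep: int, seed: int = 0) -> list[str]:
    """Generate n random candidates and keep only the `keep` best-scoring ones."""
    rng = random.Random(seed)  # seeded so that runs are reproducible
    candidates = [random_candidate(rng) for _ in range(n)]
    return sorted(candidates, key=score, reverse=True)[:keep]


if __name__ == "__main__":
    for text in generate_and_filter(n=100_000, keep=3):
        print(f"{score(text):.2f}  {text}")
```

Note how the cost scales. The rarer good output is among the raw candidates, the more candidates must be generated and scored for each one that is kept.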

Computing power

This implies the need for a vast amount of computing power, for several reasons:

- The compression during training requires vast computing resources that increase very swiftly as the amount of data increases.

- The dictionary of the Chinese room requires a vast lookup facility whose computing needs increase swiftly as the dictionary size increases.

- The typing monkeys’ filter requires an almost exponentially increasing amount of computing power.

The core point here is not so much that LLMs require vast computing resources; that alone could be fixed by technological progress and improvements in computing power. The real issue is how swiftly the computing needs grow as the LLM gets bigger and better. Any problem whose computing needs grow at least as swiftly as those of the class of problems called NP-complete, and for which there is no shortcut that gives a quick approximate answer, becomes, in practice, unsolvable with limited resources. This is because the time needed to solve it is believed to grow exponentially with the size of the problem.[6] Training the neural networks at the heart of an LLM is known to be at least as hard as NP-complete problems, and in some formulations is believed to be harder still.[7]
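To make exponential growth concrete, the sketch below solves 3-SAT by brute force, trying every true/false assignment of the variables. 3-SAT asks whether a logical formula of a particular form can be made true. This is the naive method, not how practical SAT solvers work (they rely on clever heuristics). Still, no known method avoids exponential worst-case time on hard instances. The function name and clause encoding are illustrative choices.

```python
from itertools import product


def brute_force_sat(num_vars, clauses):
    """Try every truth assignment; return (assignment or None, number of tries).

    Each clause is a tuple of non-zero ints in DIMACS style: 3 means
    "x3 is true", -3 means "x3 is false". A clause is satisfied if at least
    one of its literals is; the formula needs every clause satisfied.
    """
    tries = 0
    for values in product([False, True], repeat=num_vars):
        tries += 1
        # values[i] holds the truth value of variable i + 1
        if all(any(values[abs(lit) - 1] == (lit > 0) for lit in clause)
               for clause in clauses):
            return list(values), tries
    return None, tries  # unsatisfiable: all 2 ** num_vars assignments were tried


if __name__ == "__main__":
    # (x1 or x2 or not x3) and (not x1 or x2 or x3) and (x1 or not x2 or not x3)
    formula = [(1, 2, -3), (-1, 2, 3), (1, -2, -3)]
    print(brute_force_sat(3, formula))

    # Worst case: the number of assignments doubles with every extra variable.
    for n in (10, 20, 40, 80):
        print(f"{n} variables: up to {2 ** n:,} assignments to check")
```

Each additional variable doubles the worst-case work. Faster hardware therefore buys only a few extra variables, not a different growth curve.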

This is a practical limit to the abilities of LLM technology that no amount of technological development, short of switching to a different technology, can resolve.[8][9]

In practice, this means that there is:

- A practical cap to how fast LLM capabilities can improve

- A practical cap to how good LLMs can get within the lifetime of the universe (remember the monkeys that need more than the lifespan of the universe…)

So, what is an LLM?

So, to summarize, an LLM is a Chinese room, plus compression, plus a typing monkey style filtering system, enabled by fast-growing computing resources.

Limits of LLMs

LLMs are not a form of intelligence

This has been proven in the Chinese Rooms section.

LLMs’ speed of development is capped

This has been proven in the Computing Power section.

LLMs’ max potential is capped

This has been proven in the Computing Power section.

There are hard limits to what an AI (any AI) can achieve: the “no free lunch” theorem

Is there a universally best learning / problem-solving approach (algorithm) that works better than others for every possible problem?

The No Free Lunch Theorem from optimization theory says no.[10]

This implies that any system for pattern recognition may work in some circumstances but will fail in others. Since pattern recognition is how LLMs generalize, this hard limit on the ability to generalize also puts a hard limit on the abilities of LLMs.

In other words, LLMs, being algorithms, can only work well on some problems and not on others, as per the No Free Lunch Theorem. In particular, there are limitations to their ability to generalize.[11]
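The theorem can be checked by brute force on a toy problem. The sketch assumes a search space of just four points, each of which can score 0, 1 or 2. It enumerates all 3^4 = 81 possible scoring functions. It then compares three deterministic search strategies that never revisit a point, including a hypothetical "adaptive" one that changes course based on what it has seen. Performance is measured as the best score found after m evaluations. All names are illustrative. Averaged over all 81 functions, the three strategies come out exactly equal, as the theorem predicts. A strategy that wins on some functions must lose on others.

```python
from fractions import Fraction
from itertools import product

DOMAIN = [0, 1, 2, 3]  # hypothetical tiny search space
VALUES = [0, 1, 2]     # possible objective values at each point


def left_to_right(history):
    """Evaluate points in the order 0, 1, 2, 3."""
    visited = {x for x, _ in history}
    return next(x for x in DOMAIN if x not in visited)


def right_to_left(history):
    """Evaluate points in the order 3, 2, 1, 0."""
    visited = {x for x, _ in history}
    return next(x for x in reversed(DOMAIN) if x not in visited)


def adaptive(history):
    """Hypothetical 'clever' strategy: after a low first reading, jump to the far end."""
    visited = {x for x, _ in history}
    if len(history) == 1 and history[0][1] == 0 and 3 not in visited:
        return 3
    return next(x for x in DOMAIN if x not in visited)


def best_after(algorithm, f, m):
    """Best value seen after m evaluations of f (a tuple indexed by point)."""
    history = []
    for _ in range(m):
        x = algorithm(history)
        history.append((x, f[x]))
    return max(y for _, y in history)


def average_performance(algorithm, m):
    """Exact average of best_after over every possible scoring function."""
    functions = list(product(VALUES, repeat=len(DOMAIN)))
    total = sum(best_after(algorithm, f, m) for f in functions)
    return Fraction(total, len(functions))


if __name__ == "__main__":
    for m in range(1, len(DOMAIN) + 1):
        scores = [average_performance(a, m) for a in (left_to_right, right_to_left, adaptive)]
        print(m, [str(s) for s in scores])
```

For instance, after two evaluations every strategy averages exactly 13/9. The adaptive strategy does not improve the average. It only changes which functions it happens to do well on.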

Concretely, what are these limits?

Any new piece of information added to an existing body of knowledge can combine with the elements of that body of knowledge in a myriad of ways. The number of ways grows with the size of the body of knowledge; once combinations with groups of elements, and not just with single elements, are counted, it grows exponentially.[12]
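How fast the number of combinations grows depends on what counts as a combination. The sketch below makes a simplifying assumption: a combination is either the new piece paired with one existing element, or the new piece together with any non-empty group of existing elements. It counts both for a few sizes of the body of knowledge. The function names are illustrative.

```python
def pairwise_combinations(n: int) -> int:
    """New piece combined with exactly one of the n existing elements."""
    return n


def group_combinations(n: int) -> int:
    """New piece combined with any non-empty subset of the n existing elements."""
    return 2 ** n - 1


if __name__ == "__main__":
    for n in (10, 20, 40, 80):
        print(f"n={n:>2}: pairwise={pairwise_combinations(n):>2}, "
              f"groups={group_combinations(n):,}")
```

Pairwise combinations grow in proportion to n. Group combinations double with every element added.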

As we have seen, the No Free Lunch Theorem implies that an LLM’s ability to use the new piece of information to generalize (i.e., to detect new valid patterns) will, for some of these combinations, be quite successful, perhaps even genius.

However, in others, it will completely miss the mark.

Thus, the practical consequence of the No Free Lunch Theorem is that the AI’s ability to develop (generalize):

- Will be good in certain directions

- Will require human input, in other directions

Furthermore, this need for additional human input will grow exponentially as the AI develops. The more it develops, the more numerous (exponentially so) are the directions for generalization and the number of possible patterns, and consequently, the more numerous are the failures which require human intervention.

What about future, as yet undreamed-of types of AI?

Aside from the No Free Lunch theorem, are there any hard limits that, in future, artificial intelligence can never breach, however much technology advances?

A review of the academic literature shows that this point has already been conclusively addressed. See, for instance, Ross (1992) and Feser (2013).

The arguments in the papers cited above (and others) are a bit difficult to follow due to their rigour and technicality. So, instead of seeking to outline the entire argument, I will try to give an intuitive flavor of what they are saying.

Consider a modern computer. Is it capable of doing addition? Contrary to appearances, the answer is no. There are many cases where the computer will get the answer wrong. This happens often enough that Microsoft felt obliged to write an article explaining why Excel sometimes gets it wrong.[13]
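Two everyday ways in which a computer's "addition" departs from arithmetic can be reproduced in a few lines of Python. The 8-bit example is a simulation, because Python's own integers do not overflow. It assumes an unsigned 8-bit register that keeps only the low 8 bits of the result (`add_uint8` is an illustrative name). The floating-point examples use Python's standard IEEE 754 double-precision floats.

```python
def add_uint8(a: int, b: int) -> int:
    """Simulate addition in an unsigned 8-bit register: only the low 8 bits survive."""
    return (a + b) & 0xFF


if __name__ == "__main__":
    print(add_uint8(200, 100))           # 44, not 300: the carry out of bit 7 is lost
    print(0.1 + 0.2)                     # 0.30000000000000004
    print(0.1 + 0.2 == 0.3)              # False
    print(2.0 ** 53 + 1.0 == 2.0 ** 53)  # True: the +1 is rounded away
```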

The computer merely simulates addition.

But is this merely a limitation of the current state of technology, or is it a hard limit? Is it possible, in theory, for technology to evolve enough for computers to one day do addition?

It is trivially true that an algorithm exists that perfectly instantiates addition. However, this is not the question we are asking. The question is whether a material system, a purely material system, can perfectly instantiate addition.

Consider the thought experiment: at some far future date, we are testing the capabilities of two computers. We find that when we input two numbers into Computer 1, it provides precisely the sum of these two numbers, whatever the input. As for Computer 2, for any inputs up until a certain number X, it provides the sum of the inputs. Beyond that, it provides another answer with some interesting patterns.
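The two computers can be sketched as two functions. For Computer 2, the sketch borrows Kripke's (1982) "quus": it agrees with addition whenever both inputs are below 57 and returns 5 otherwise, so here X = 57. The threshold and fallback value are arbitrary. The names `computer_1`, `computer_2` and `agree_on_tests` are hypothetical. Any battery of tests that stays below the threshold gives identical results for both machines.

```python
X = 57  # threshold taken from Kripke's quus example; any value would do


def computer_1(a: int, b: int) -> int:
    """Plus: the sum, for every input."""
    return a + b


def computer_2(a: int, b: int) -> int:
    """Quus (after Kripke): the sum while both inputs are below X, 5 otherwise."""
    if a < X and b < X:
        return a + b
    return 5


def agree_on_tests(limit: int) -> bool:
    """Do the two machines agree on every pair of inputs below `limit`?"""
    return all(
        computer_1(a, b) == computer_2(a, b) for a in range(limit) for b in range(limit)
    )


if __name__ == "__main__":
    print(agree_on_tests(X))                        # True: every test below X passes
    print(computer_1(68, 57), computer_2(68, 57))   # 125 5
```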

Is it possible, purely from inspection of the material properties of Computer 2, to determine whether it is malfunctioning, or whether it is in fact intended to behave this way? Purely from inspection of the material properties of the computer, without any additional knowledge, we cannot demonstrate that it is malfunctioning. For instance, perhaps there are certain engineering applications to this quasi-sum. Or perhaps it is being used to model some physical process which has analogous properties.[14]

The answer is, therefore, no.

In that case, can we purely by physical inspection of Computer 1 prove that it is correctly functioning, given that when we provide two inputs it provides the sum of both inputs? The answer here also is no. The only reason why we believe it is correctly functioning is because we understand the concept of addition. In other words, we are importing our own knowledge and projecting it onto the computer. From purely inspecting its material properties, there is no way for us to know that it is correctly functioning. Perhaps it is intended to function like Computer 2 but there was a manufacturing glitch.[15]

It follows that it is impossible for a purely material system to fully instantiate a concept such as addition, and consequently impossible for a purely material system to achieve true intelligence.[16]

Conclusion: the limits of AI

In conclusion, on the strength of the no free lunch theorem alone, we already know that the more an AI develops, the more directions of generalization it will have available to it, and the more of these directions for generalization will result in failure. These failures increase exponentially and can only be surmounted by an external input, presumably human.

Furthermore, on the strength of the intrinsic limits to any technological material system, such a system will never reach the capabilities of the human mind.

Implication: AI even more likely to dominate humanity

It is natural to assume that, if AI achieves true intelligence, it is likely to dominate humanity.

Is this really so?

Consider the consequences of the limitations described above on the likelihood of AI dominating humanity.

Once a human being has shown the AI how to generalize in order to resolve a particular problem, the AI becomes capable of addressing this problem more cheaply than human labor. Consequently, there is a tremendous economic incentive to keep developing AI until the point of diminishing returns is reached. However, as the AI gets more and more complex, a larger and larger proportion of its generalizations will miss the mark. Since these failures increase exponentially, it will not be economically viable to address them all, and in many cases it will be cheaper to simply assume that the AI has generalized well enough for practical needs.
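The economics of this argument can be illustrated with a toy model. Every number in it is a hypothetical assumption, not a measurement. The model assumes that failed generalizations double with each new generation of the AI, while the human review budget grows by a fixed amount per generation. The function name and its parameters are illustrative. Under these assumptions, the share of failures that anyone actually checks falls toward zero.

```python
def reviewed_fraction(generation: int, base_failures: int = 10,
                      budget_start: int = 100, budget_step: int = 100) -> float:
    """Share of failures humans can review in a given generation (toy model).

    Hypothetical assumptions: failures double every generation, while the
    review budget grows by a fixed amount per generation.
    """
    failures = base_failures * 2 ** generation
    budget = budget_start + budget_step * generation
    return min(1.0, budget / failures)


if __name__ == "__main__":
    for g in (0, 5, 10, 15, 20):
        print(f"generation {g:>2}: {reviewed_fraction(g):.4%} of failures reviewed")
```

Changing the starting values shifts the point at which review stops keeping up, but not the outcome. Any linear budget is eventually overtaken by doubling failures.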

Consequently, we will be living in a world where AI is relied upon more and more to make decisions precisely as it becomes less and less reliable for making correct decisions.

Thus, we are left with an even worse scenario than that of a smart AI dominating humanity: the scenario of a stupid AI dominating humanity, because the infrastructure of much of society’s decision making has been cheaply offloaded onto it. Note that humanity participates willingly in this scenario.

The (rotten) cherry on the pie is that nothing in the limitations to AI that we have reviewed prevents an AI from ending up programmed to seek to dominate human beings. This can even happen by mistake. Programming feedback loops to generate goal-directed behavior is easy, and does not require intelligence.

Thus, we are left with the scenario of humanity in servitude to a stupid AI.

But it gets worse. Since the latter “Servitude” scenario would likely be hard for us humans to distinguish from the previous “Infrastructure” scenario, it would be unlikely to raise alarm bells among us.

Thus, we are left with the nightmare scenario: the scenario of a stupid AI that dominates a willing humanity.

I am in no way saying that this will happen. The sole conclusion I wish to draw is that a stupid AI can be more dangerous than a smart AI. In my personal opinion, the bigger danger will not be the societal danger touched upon in this section, but the one-on-one psychological impact that over-reliance on AI will have on adults, and on the development of social and thinking skills in children. Skills such as independent thinking are just that, skills. Use it or lose it.

References

Abrahamsen, M., Kleist, L., Miltzow, T. (2021). Training Neural Networks is ∃ℝ-complete. arXiv preprint arXiv:2102.09798. https://arxiv.org/pdf/2102.09798

Feser, E. (2013). Kripke, Ross, and the Immaterial Aspects of Thought. American Catholic Philosophical Quarterly, 87(1), 1–32.

Froese, V., Hertrich, C. (2023). Training Neural Networks is NP-Hard in Fixed Dimension. arXiv preprint arXiv:2303.17045. https://arxiv.org/pdf/2303.17045

Klima, G. (2009). Aquinas on the Materiality of the Human Soul and the Immateriality of the Human Intellect. Philosophical Investigations, 32, 163–182.

Kripke, S. A. (1982). Wittgenstein on rules and private language: An elementary exposition. Harvard University Press.

Ross, J. (1992). Immaterial Aspects of Thought. Journal of Philosophy, 89, 136–150.

Searle, J. (1980). Minds, Brains and Programs. Behavioral and Brain Sciences, 3(3), 417–457.

Woodcock, S., Falletta, J. (2024). A numerical evaluation of the Finite Monkeys Theorem. Franklin Open, 9, 100171.

[1] Example 1: In the context of the acquisition and turnaround of a near-bankrupt company, cash flow was the most important consideration. As a result, the ability to peer into the future, even if only by a few days, in order to predict revenue constituted a decisive advantage. We achieved this using AI.

Example 2: In the context of negotiations with our client’s suppliers, we faced a difficulty: there weren’t many alternatives to the suppliers we were negotiating with. To strengthen our negotiating position, we needed to find more potential suppliers capable of manufacturing the same products but not advertising the fact. We used AI to identify other factories with such capabilities.

Example 3: During the COVID pandemic, we created a system to dynamically update the relevant policies, based on a cost-benefit analysis that was updated in real time to take into account the latest incoming data (such as COVID cases in the organization and where they appeared).

[2] Devised by John Searle (1980) to demonstrate the limits of artificial intelligence.

[3] Much of machine learning / AI is devoted to addressing this issue. For instance, that is why holdout sets are withheld from the training data and used to test the AI. If the AI gets the holdouts right, this suggests that it has identified real patterns. If, however, it gets the holdouts wrong, this implies that some of the patterns it identified do not exist in the real world.

[4] This is the Infinite Monkey Theorem.

[5] See Woodcock & Falletta (2024). Entertainingly, an experiment with real monkeys resulted mainly in broken keyboards and defecation.

[6] This is based on the fact that the 3-SAT problem can be reduced in polynomial time to any other NP-complete problem. The 3-SAT problem is believed to require exponential time, as per the Exponential Time Hypothesis. Therefore, no NP-complete problem can be solved in polynomial time; each requires time growing roughly like 2^(n^ε) for some ε > 0 that depends on how much the reduction enlarges the input. Otherwise, 3-SAT could be solved in subexponential time, contradicting the hypothesis.

[7] The intuitive reason why LLMs require near-exponential time is explained in the section “Concretely, what are these limits?”. More formally: the problem of deciding whether, for a given neural network, there is a set of weights that correctly classifies the training examples within a certain tolerance is known to be NP-hard (Froese & Hertrich (2023); Abrahamsen, Kleist & Miltzow (2021)).

[8] Unless the complexity theory conjecture that P≠NP turns out to be wrong. Mathematicians consider this to be very unlikely.

[9] Paradoxically, this also implies that technological breakthroughs that greatly increase the computational efficiency of AIs in most domains are likely to occur. This is the paradox of NP-complete problems: heuristics can usually be found, but they can’t apply to all inputs. Furthermore, once these breakthroughs are achieved, we are back to the long, hard slog.

[10] That is the intuitive meaning. Formally, the No Free Lunch Theorem says that, when averaged over all possible problems, every optimization algorithm performs equally well. Note that learning algorithms are a subcategory of optimization algorithms.

[11] It could be argued that this limitation also applies to human thinking. However, this assumes that human beings only use one or a small number of algorithms. This is a highly debatable assumption. Strong evidence against it can be drawn from the plethora of different types of AI technology, each of which is radically different from all of the others, and each of which drew inspiration from some mode or other of human thinking. This is not to say that human thinking does not have limitations.

A critic could further argue that this implies that a large enough number of different types of AI could break out of the limitations of LLMs. This is likely true. It raises the question of whether this coordinated combination of AIs would itself encounter limitations. This will be answered below. We will also shed light on the deeper question of whether the same limits also apply to human intelligence.

Note, however, that no AI system or combination of systems can break out from the consequences of the No Free Lunch Theorem, and none can break out from the consequences of the Chinese room analysis.

[12] As a result, the algorithm is near-exponential as a function of the size of its inputs.

[13] This can be due to truncation, floating-point arithmetic, reaching the end of a buffer, or other reasons.

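[14] Far from being far-fetched, this has physical analogues: there are known physical processes that behave like addition for small values but eventually diverge from it. An example is the relativistic addition of velocities, which follows from the Lorentz transformation: at everyday speeds it is indistinguishable from ordinary addition, but the combined velocity never exceeds the speed of light.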

[15] This example is inspired by Kripke's (1982) analogous example of the quus operation.

[16] What does this say about human intelligence? This is a hot issue in philosophy of mind. The responses generally fall into three categories:

1/ Purely materialist: “The human mind is a purely material system. As demonstrated, material systems cannot instantiate concepts. Therefore, the human mind cannot instantiate concepts. We merely have the illusion that we are reasoning.” The problem with such a line of reasoning is that, if its conclusion is that we cannot reason, then this line of reasoning is necessarily invalid. Also, this approach is unable to explain qualia (subjective experience) without workarounds such as “emergent properties”, which bring it closer to category 3.

2/ Cartesian dualism: “both matter and non-matter exist. Mind is non-matter.” This conception, which arose from Descartes, has difficulty explaining how the non-matter can act on the matter.

3/ Hylomorphism: this is a moderate position between the above two. “Common sense gets it right most of the time, and if philosophical reasoning is out of line with common sense, there is probably a reasoning error in the philosophy. Here, we need to avoid both the extremes of simplistic materialism and of wishy-washy dualism. Humans have a form, which is what makes them human. This is similar to how a hoop has a form (its shape and configuration) that makes it a hoop. In both cases, the form is material in the sense that it exists through the matter that is configured into that form. Thanks to the matter of the hoop being formed into the form of a hoop, we have a hoop. Of course, the form itself is more akin to a concept than to matter, so there is also a sense in which it is immaterial. Hence we’ve reconciled both poles of dualism, grounding the immaterial in the material. So far, hoop and human are similar. The similarity ends here: the form of the hoop does not have its own existence. It exists only to the extent that it is instantiated in the hoop. By contrast, by a line of reasoning similar to that described in the text (see Klima (2009)), the form of a human, in order to be able to grasp concepts, must exist in its own right. Consequently, the form of a human has both material and immaterial aspects, and exists in its own right.” Note how this conception grounds mind in matter while still giving it an existence of its own. It originated with Aristotle, was developed by Aquinas, and is unpopular nowadays because the Latin word for the form of a human (anima) translates as “soul”. Incidentally, this approach is the official position of the Catholic Church, which, for some, discredits it. However, such considerations should not be relevant in scientific discourse.